Mirai raises $10M to build the on-device AI capability layer.

By Mirai team

Feb 19, 2026

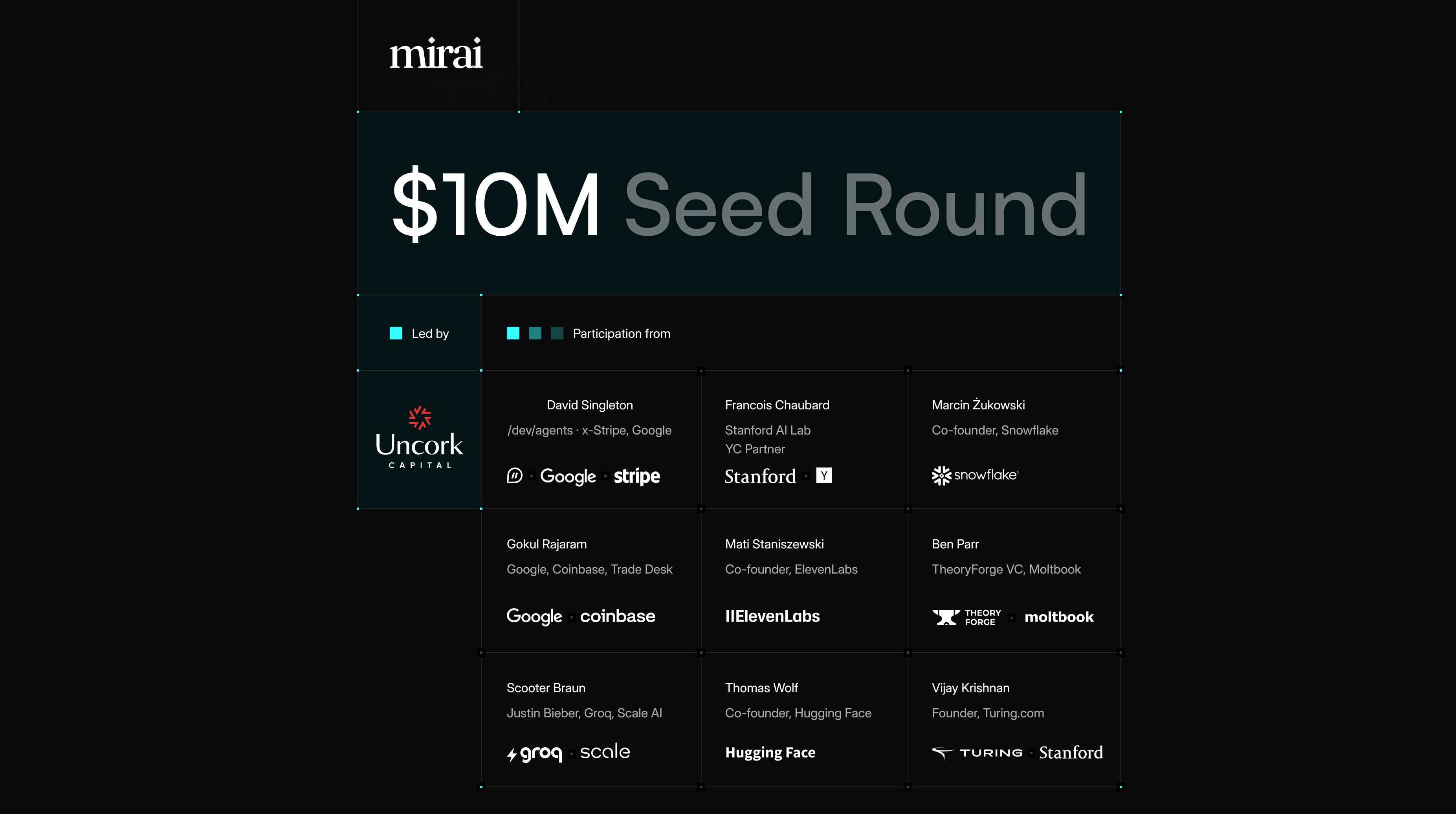

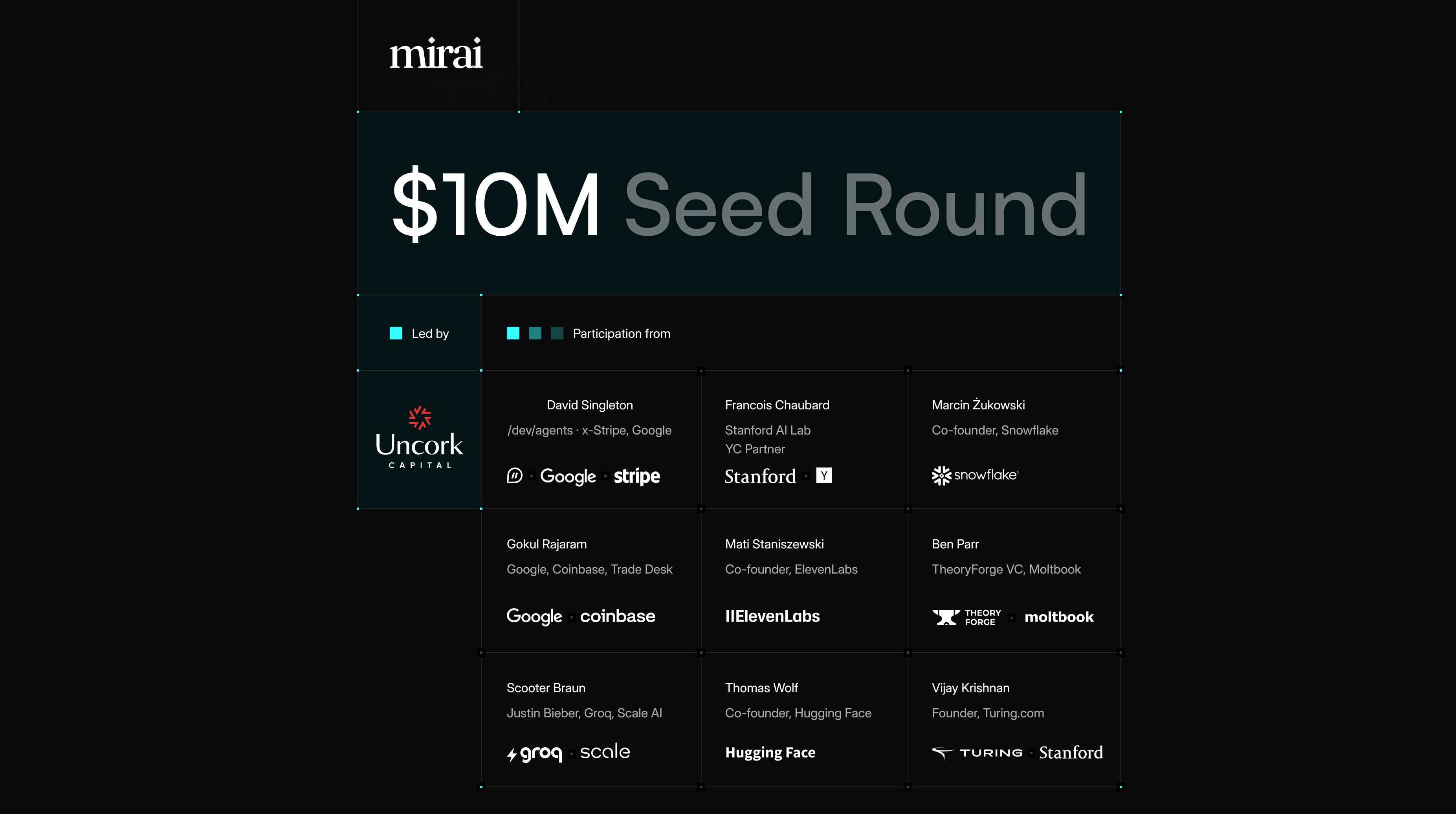

Mirai raised $10M in seed funding led by Uncork Capital to make on-device inference accessible to developers.

Modern devices already ship with dedicated AI silicon. Most AI applications still default to the cloud because running models locally requires deep systems expertise across memory management, kernel optimization, and hardware-aware execution.

Mirai removes that barrier.

Mirai built a proprietary inference engine optimized for Apple Silicon. Developers integrate it in a few lines of code, without touching low-level systems complexity. Existing cloud pipelines continue to work, with optional hybrid routing between device and cloud.
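As a rough illustration of what hybrid routing between device and cloud can look like, here is a minimal sketch. It is not Mirai's SDK or API: `Route`, `make_hybrid_router`, the prompt-length threshold, and the two stand-in backends are all hypothetical names chosen for this example. The assumed policy is to try the local backend first and fall back to the existing cloud pipeline when the prompt is too long or the local run fails.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Route:
    """One place a prompt can be executed (hypothetical, for illustration)."""
    name: str
    run: Callable[[str], str]


def make_hybrid_router(
    device: Route,
    cloud: Route,
    max_device_prompt_chars: int = 4000,  # assumed threshold, not a real limit
) -> Callable[[str], Tuple[str, str]]:
    """Return a function that prefers the device route and falls back to cloud."""

    def route(prompt: str) -> Tuple[str, str]:
        # Long prompts skip the device entirely in this sketch.
        if len(prompt) <= max_device_prompt_chars:
            try:
                return device.name, device.run(prompt)
            except RuntimeError:
                # Local failure (for example, out of memory): use the cloud path.
                pass
        return cloud.name, cloud.run(prompt)

    return route


# Stand-ins for real backends, so the sketch runs without any SDK installed.
def fake_device(prompt: str) -> str:
    if "fail" in prompt:
        raise RuntimeError("simulated on-device failure")
    return f"device:{prompt}"


def fake_cloud(prompt: str) -> str:
    return f"cloud:{prompt}"


router = make_hybrid_router(
    Route("device", fake_device),
    Route("cloud", fake_cloud),
    max_device_prompt_chars=20,
)
```

Calling `router("hello")` takes the device path, while a prompt longer than the threshold or one that triggers the simulated failure is served by the cloud stand-in. A real router would likely weigh more signals, such as battery state, model availability, or latency budgets.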

On certain model–device pairs:

- up to 37% faster generation
- up to 59% faster prefill
- consistent gains over MLX and llama.cpp

AI is a three-body problem

AI performance is not just about better models.

It is the interaction of three moving parts:

Model × Runtime × Hardware

Most teams optimize only one variable.

Mirai focuses on the interaction layer, the runtime that binds models to hardware efficiently. Without a hardware-aware execution layer, even strong models underperform. With it, smaller models become viable, latency drops, and cost structures change.

Inference becomes the execution layer of AI software.

The shift

Personal computing has repeatedly won out over thin clients.

AI is following the same trajectory.

A new paradigm is emerging: smaller, highly capable models that sacrifice encyclopedic knowledge for responsiveness and system-level integration. They run always-on, directly on personal devices.

What they lack in breadth, they compensate for with:

- ultra-low interaction latency
- direct access to local state and context
- privacy by default
- offline continuity

Inference is becoming a system layer, not a backend API.

Mirai is building that layer.

What’s next

Mirai is expanding beyond Apple Silicon and extending support across text, voice, vision, and multimodal workloads.

The goal is simple: make on-device inference the default execution path for AI applications.

If you’re excited to work on on-device AI, combining inventive research, design, and engineering in a small, talent-dense group, we’d love to hear from you.
